o1

OpenAI finally reveals “🍓”: crazy chain-of-thought-tuned model » GPT-4o

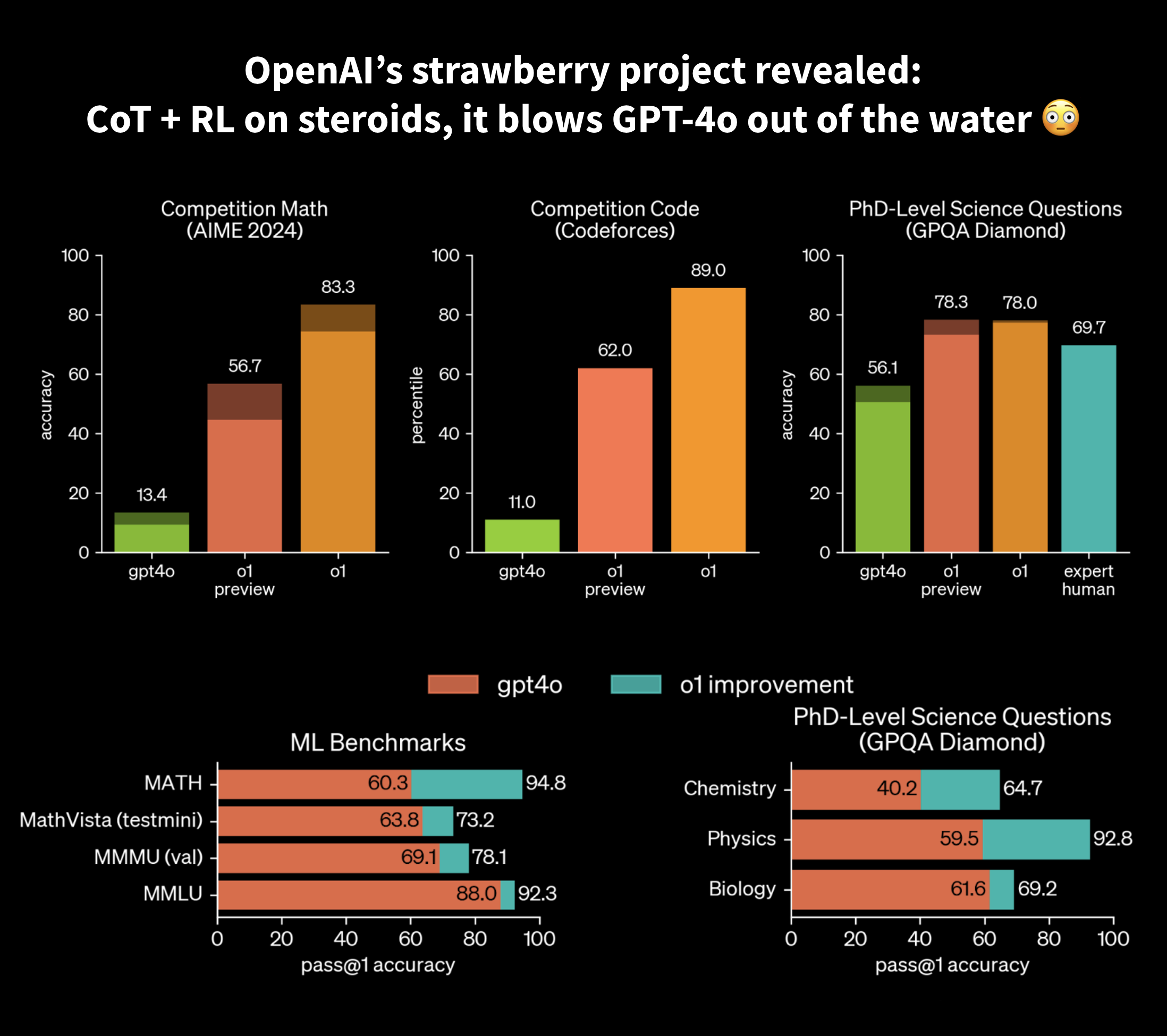

OpenAI had hinted at a mysterious “project strawberry” for a long time: they published this new model called “o1” 1 hour ago, and the performance is just mind-blowing.

🤯 Places among the top 500 students in the US in a qualifier for the USA Math Olympiad

🤯 Beats human-PhD-level accuracy by 8 points on GPQA, a benchmark of hard science problems where the previous best was Claude 3.5 Sonnet with 59.4%.

🤯 Scores 78.2% on vision benchmark MMMU, making it the first model competitive w/ human experts

🤯 GPT-4o on MATH scored 60% ⇒ o1 scores 95%

How did they pull this off? Sadly OpenAI keeps increasing their performance in “making cryptic AF reports to not reveal any real info”, so here are excerpts:

💬 “Similar to how a human may think for a long time before responding to a difficult question, o1 uses a chain of thought when attempting to solve a problem. Through reinforcement learning, o1 learns to hone its chain of thought and refine the strategies it uses. It learns to recognize and correct its mistakes. It learns to break down tricky steps into simpler ones. It learns to try a different approach when the current one isn’t working.”

Ok thanks a lot, that’s not helpful at all, not that I would have liked to REPRODUCE AND OPEN-SOURCE IT anyway.

And of course, they decide to hide the content of this precious Chain-of-Thought. Would it be for maximum profit? Of course not, you awful capitalist, it’s to protect users:

💬 “We also do not want to make an unaligned chain of thought directly visible to users.”

They’re right, it would certainly have hurt my feelings to see the internals of this model tearing apart math problems.

🤔 I suspect it’s not only CoT going on, but also some agentic behaviour where the model can just call a code executor. The kind of score improvement they show certainly looks like what you see with agents.
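
To make that hunch concrete, here is a minimal sketch of what a “model + code executor” loop could look like. This is pure speculation and not OpenAI’s method: `stub_model`, `run_python`, `agent_loop` and the `CODE:` / `ANSWER:` / `OBSERVATION:` protocol are all made up for illustration, and a hard-coded stub stands in for a real LLM.

```python
import subprocess
import sys


def run_python(code: str, timeout: float = 5.0) -> str:
    """Run a snippet in a separate Python process and return its output."""
    result = subprocess.run(
        [sys.executable, "-c", code],
        capture_output=True,
        text=True,
        timeout=timeout,
    )
    return (result.stdout + result.stderr).strip()


def stub_model(history: list[str]) -> str:
    """Hypothetical stand-in for an LLM (assumption, not a real API).

    First turn: ask for a tool call. Once an observation exists: answer with it.
    """
    observations = [m for m in history if m.startswith("OBSERVATION:")]
    if not observations:
        return "CODE: print(sum(i * i for i in range(1, 101)))"
    return "ANSWER: " + observations[-1].removeprefix("OBSERVATION:").strip()


def agent_loop(question: str, model=stub_model, max_steps: int = 5) -> str:
    """Alternate between model replies and code execution until an answer appears."""
    history = [f"QUESTION: {question}"]
    for _ in range(max_steps):
        reply = model(history)
        history.append(reply)
        if reply.startswith("ANSWER:"):
            return reply.removeprefix("ANSWER:").strip()
        if reply.startswith("CODE:"):
            # Feed the executor's output back to the model as an observation.
            output = run_python(reply.removeprefix("CODE:").strip())
            history.append("OBSERVATION: " + output)
    return "no answer within step budget"


if __name__ == "__main__":
    print(agent_loop("What is the sum of the squares from 1 to 100?"))
```

The point of the sketch: the model never has to do the arithmetic “in its head”, it just has to write the right code and read the result back, which is one plausible way to get big jumps on math-style benchmarks.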

👉 This model will be immediately released for ChatGPT and some “trusted API users”.

Let’s start cooking to release the same thing in open-source in 6 months! 🚀