Divide your ChatGPT costs by 10 by directly calling the API

Why a UI for the GPT API?

❌ A few months ago, I canceled my ChatGPT subscription.

The tool is incredible, especially GPT-4: when I use it for debugging, for example, it can save me several hours a day. But a $20-per-month subscription is too expensive if I don’t use it regularly.

Then, this summer, everything changed: GPT-4 became available through OpenAI’s API.

The API is an interface that lets your programs request responses from OpenAI models. The real difference is that you pay according to the number of tokens (word fragments) you send to and receive from GPT-4, not a flat fee ▶ So the payment is adapted to usage.
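
To make the pay-per-use arithmetic concrete, here is a rough sketch of how a day's bill could be estimated. The two per-token prices and the usage figures are assumptions chosen for illustration, not quoted rates, and request_cost is a hypothetical helper, so check OpenAI's pricing page for the real numbers.

```python
# Illustrative prices in USD per 1,000 tokens. These are assumptions for the
# sake of the arithmetic, not quoted rates.
PROMPT_PRICE_PER_1K = 0.03
COMPLETION_PRICE_PER_1K = 0.06


def request_cost(prompt_tokens: int, completion_tokens: int) -> float:
    """Return the estimated cost in USD of one API call."""
    return (
        prompt_tokens / 1000 * PROMPT_PRICE_PER_1K
        + completion_tokens / 1000 * COMPLETION_PRICE_PER_1K
    )


# A hypothetical heavy day: 15 questions, each with 1,000 tokens sent
# and 500 tokens returned.
daily_cost = 15 * request_cost(prompt_tokens=1000, completion_tokens=500)
print(f"Estimated cost for the day: ${daily_cost:.2f}")
```

Under these assumed prices a heavy day comes to about $0.90, and a day without any requests costs nothing.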

🛠 So I just built an interface that allows you to use the OpenAI API in a notebook.

Result: huge reduction in costs

✅ For exactly the same responses as before, my costs have dropped to less than $1 per day when I use it a lot, $0 on other days.

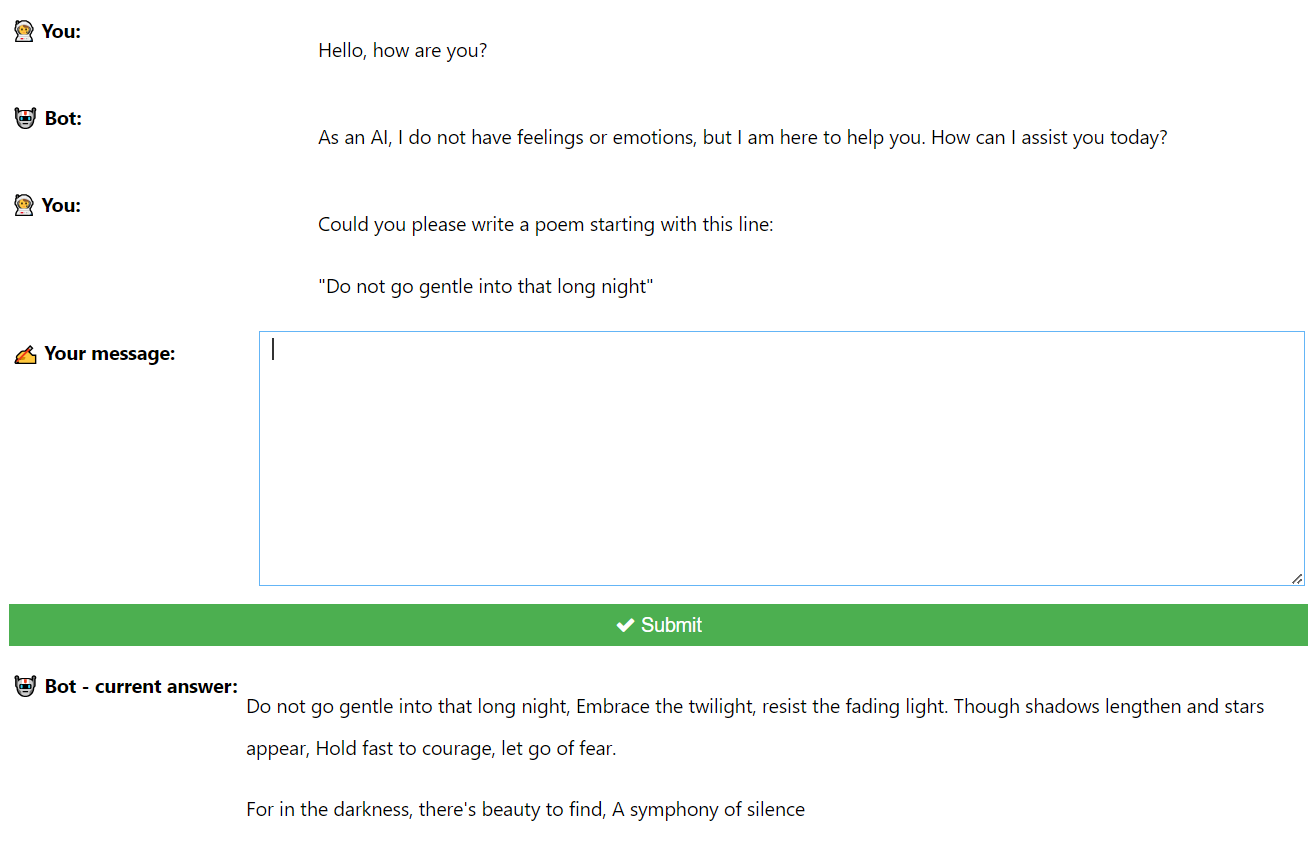

This is what it looks like:

How it's built

- The interface is made of simple IPython widgets.

The core of the machine is the get_user_input method of the Chat class. Among other things, it updates the history attribute: a list of messages, each one a dictionary with the keys "role" and "content". This is the format that openai.ChatCompletion.create() accepts directly.

    def get_user_input(self, b) -> None:
        # Show the user's message and record it in the conversation history.
        message = self.user_input.value
        self.add_message("👨🚀 You:", message)
        self.history.append({"role": "user", "content": message})

        # Request a streamed completion so the reply can be rendered as it arrives.
        response = openai.ChatCompletion.create(
            model=self.model, messages=self.history, temperature=0, stream=True
        )

        collected_messages = []
        current_reply = ""
        for chunk in response:
            chunk_message = chunk["choices"][0].get("delta", {}).get("content")
            if chunk_message is not None:
                collected_messages.append(chunk_message)
                current_reply = "".join(collected_messages)
                # Live preview of the partial reply.
                self.answer.value = sanitize_code(markdown(current_reply))

        # Clear the preview and store the finished reply as an assistant message.
        self.answer.value = ""
        self.add_message("🤖 Bot:", current_reply)
        self.history.append({"role": "assistant", "content": current_reply})
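
As a small illustration of the history format described above, here is a sketch of how such a list could be built outside the widget. The append_message helper, the VALID_ROLES set, and the sample messages are hypothetical and not part of the Chat class; the only thing taken from the text is the "role"/"content" dictionary shape.

```python
from typing import Dict, List

Message = Dict[str, str]

# Roles commonly used in the chat format; "assistant" marks model replies.
VALID_ROLES = {"system", "user", "assistant"}


def append_message(history: List[Message], role: str, content: str) -> None:
    """Append one message to the history, rejecting unknown roles."""
    if role not in VALID_ROLES:
        raise ValueError(f"unknown role: {role!r}")
    history.append({"role": role, "content": content})


history: List[Message] = []
append_message(history, "system", "You are a helpful debugging assistant.")
append_message(history, "user", "Why does my loop never terminate?")
append_message(history, "assistant", "Check that the loop variable is updated.")
# `history` now has the list-of-dictionaries shape expected for `messages`.
print(history)
```

Because the whole list is sent with every request, the model sees the full conversation each time, and longer histories mean more tokens billed per call.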

- The stream=True option in openai.ChatCompletion.create() allows you to receive the GPT completion live, making it much easier to interact.
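
To show what receiving the completion live means in practice, here is a sketch of the accumulation loop run against simulated chunks, so it works without an API key. The fake_stream and partial_replies functions are hypothetical, and the chunk dictionaries only mimic the shape that get_user_input reads; real chunks carry more fields.

```python
from typing import Dict, Iterable, Iterator


def fake_stream() -> Iterator[Dict]:
    """Simulate streamed chunks; a real call would receive them from the API."""
    yield {"choices": [{"delta": {"role": "assistant"}}]}  # no content yet
    for piece in ["Hel", "lo", ", ", "world!"]:
        yield {"choices": [{"delta": {"content": piece}}]}
    yield {"choices": [{"delta": {}, "finish_reason": "stop"}]}  # end marker


def partial_replies(chunks: Iterable[Dict]) -> Iterator[str]:
    """Yield the reply accumulated so far each time new text arrives."""
    collected = []
    for chunk in chunks:
        text = chunk["choices"][0].get("delta", {}).get("content")
        if text is not None:
            collected.append(text)
            yield "".join(collected)


snapshots = list(partial_replies(fake_stream()))
print(snapshots[-1])
```

Each snapshot is what the interface could display at that moment, which is why the answer appears to be typed out progressively instead of arriving all at once.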

To use it

Clone this repo, copy your OpenAI API key, run the notebook cell, and you’re good to go!